Iterative set explainer. It uses a samples generator (Gibbs, GAN, or other) or a proxy predictor to get as close as possible to the final prediction score, with the lowest variance, using the smallest possible set of variables.

#include <ExplainWrapper.h>

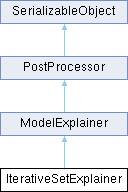

Inheritance diagram for IterativeSetExplainer:

Public Member Functions

| Type | Member | Description |
|---|---|---|
| void | _learn (const MedFeatures &train_mat) | Overload of the ModelExplainer learning function (easier API). |
| void | explain (const MedFeatures &matrix, vector< map< string, float > > &sample_explain_reasons) const | Virtual; returns the explanation results in sample_feature_contrib. |
| void | post_deserialization () | |
| void | load_GIBBS (MedPredictor *original_pred, const GibbsSampler< float > &gibbs, const GibbsSamplingParams &sampling_args) | |
| void | load_GAN (MedPredictor *original_pred, const string &gan_path) | |
| void | load_MISSING (MedPredictor *original_pred) | |
| void | load_sampler (MedPredictor *original_pred, unique_ptr< SamplesGenerator< float > > &&generator) | |
| void | dprint (const string &pref) const | |
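load_sampler receives its generator as unique_ptr< SamplesGenerator< float > > &&, so the caller hands over ownership and has to pass an rvalue (for example via std::move). The sketch below illustrates only that ownership pattern. ToySamplesGenerator, ConstantGenerator and ToyExplainer are hypothetical stand-ins, not the library's classes, and the original_pred argument is omitted.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical stand-in for SamplesGenerator<T>; NOT the library class.
template <typename T>
struct ToySamplesGenerator {
    virtual ~ToySamplesGenerator() = default;
    virtual T sample() = 0;  // hypothetical: draw one value
};

// Hypothetical concrete generator that always returns the same value.
template <typename T>
struct ConstantGenerator : ToySamplesGenerator<T> {
    explicit ConstantGenerator(T v) : value(v) {}
    T sample() override { return value; }
    T value;
};

// Hypothetical explainer that mirrors only the ownership semantics of load_sampler.
struct ToyExplainer {
    std::unique_ptr<ToySamplesGenerator<float>> generator;

    // Takes ownership of the generator; after the move the caller's pointer is empty.
    void load_sampler(std::unique_ptr<ToySamplesGenerator<float>> &&gen) {
        assert(gen != nullptr);
        generator = std::move(gen);
    }
};
```

Because the parameter is an rvalue reference, passing a named unique_ptr without std::move does not compile, while a temporary such as std::make_unique<ConstantGenerator<float>>(2.0f) binds directly.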

Public Member Functions inherited from ModelExplainer

| Type | Member | Description |
|---|---|---|
| virtual int | init (map< string, string > &mapper) | Global initialization of the general arguments shared by all explainers; directly initializes all arguments in GlobalExplainerParams. |
| virtual int | update (map< string, string > &mapper) | Virtual; updates the object from parsed fields. |
| virtual void | Learn (const MedFeatures &train_mat) | Learns from the predictor and the training matrix (PostProcessor API). |
| void | Apply (MedFeatures &matrix) | Alias for explain. |
| void | get_input_fields (vector< Effected_Field > &fields) const | Lists the fields used by this post-processor. |
| void | get_output_fields (vector< Effected_Field > &fields) const | Lists the fields affected by this post-processor. |
| void | init_post_processor (MedModel &model) | Initializes the ModelExplainer from a MedModel: copies the predictor pointer and may save pointers to normalizers. |
| virtual void | explain (MedFeatures &matrix) const | Stores the explanation results in the matrix. |
| void | dprint (const string &pref) const | |

Public Member Functions inherited from PostProcessor

| Type | Member | Description |
|---|---|---|
| void * | new_polymorphic (string dname) | Polymorphic classes that need to serialize/deserialize a pointer to a derived class must implement this function to return a new instance of the derived class, given its type name (as in my_type). |
| virtual float | get_use_p () | |
| virtual int | get_use_split () | |

Public Member Functions inherited from SerializableObject

| Type | Member | Description |
|---|---|---|
| virtual int | version () const | Relevant for serialization. |
| virtual string | my_class_name () const | For better serialization handling, it is highly recommended that every class inheriting from SerializableObject implement this method. |
| virtual void | serialized_fields_name (vector< string > &field_names) const | Names of the serialized fields. |
| virtual void | pre_serialization () | |
| virtual size_t | get_size () | Returns the size in bytes needed for serialization. |
| virtual size_t | serialize (unsigned char *blob) | Serializes the object into blob memory; returns the number of bytes written. |
| virtual size_t | deserialize (unsigned char *blob) | Deserializes the blob into the object; returns the number of bytes read. |
| size_t | serialize_vec (vector< unsigned char > &blob) | |
| size_t | deserialize_vec (vector< unsigned char > &blob) | |
| virtual size_t | serialize (vector< unsigned char > &blob) | |
| virtual size_t | deserialize (vector< unsigned char > &blob) | |
| virtual int | read_from_file (const string &fname) | Reads and deserializes the model. |
| virtual int | write_to_file (const string &fname) | Serializes the model and writes it to a file. |
| virtual int | read_from_file_unsafe (const string &fname) | Reads and deserializes the model without checking the version number (unsafe read). |
| int | init_from_string (string init_string) | Initializes from a string. |
| int | init_params_from_file (string init_file) | |
| int | init_param_from_file (string file_str, string &param) | |
| int | update_from_string (const string &init_string) | |
| virtual string | object_json () const | |

Data Fields

| Type | Field | Description |
|---|---|---|
| GeneratorType | gen_type = GeneratorType::GIBBS | Generator type. |
| string | generator_args = "" | Generator arguments, used for learning. |
| string | sampling_args = "" | Arguments for sampling. |
| int | n_masks = 100 | Number of tests (masks) to conduct, following the Shapley approach. |
| bool | use_random_sampling = true | If true, uses random sampling; otherwise, first samples a mask size and then creates the mask. |
| float | missing_value = MED_MAT_MISSING_VALUE | Missing value. |
| float | sort_params_a | Importance weight for the minimal distance from the original score. |
| float | sort_params_b | Weight for the variance of the prediction under imputation; the remaining weight goes to the change from the previous step. |
| float | sort_params_k1 | Importance weight for the minimal distance from the original score. |
| float | sort_params_k2 | Weight for the variance of the prediction under imputation; the remaining weight goes to the change from the previous step. |
| int | max_set_size | Maximum size of the set to search for in the explanation. |
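The n_masks and use_random_sampling fields describe two ways of drawing feature masks. The following is a minimal sketch of what that could look like, assuming a mask is a vector<bool> in which true marks a feature that is kept (known) and assuming a keep probability of 0.5 in the random mode. sample_mask and sample_masks are hypothetical helpers; the library's actual sampling code may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical helper: draw one mask of n_features entries; true = feature kept.
//  use_random_sampling == true : each feature is kept independently with
//                                probability 0.5 (assumed value).
//  use_random_sampling == false: draw a mask size k uniformly from [0, n_features],
//                                then keep exactly k randomly chosen features.
std::vector<bool> sample_mask(int n_features, bool use_random_sampling, std::mt19937 &rng) {
    assert(n_features > 0);
    std::vector<bool> mask(n_features, false);
    if (use_random_sampling) {
        std::bernoulli_distribution keep(0.5);
        for (int i = 0; i < n_features; ++i) mask[i] = keep(rng);
    } else {
        std::uniform_int_distribution<int> size_dist(0, n_features);
        const int k = size_dist(rng);
        std::vector<int> idx(n_features);
        std::iota(idx.begin(), idx.end(), 0);  // 0, 1, ..., n_features - 1
        std::shuffle(idx.begin(), idx.end(), rng);
        for (int i = 0; i < k; ++i) mask[idx[i]] = true;
    }
    return mask;
}

// Hypothetical helper: draw n_masks masks from a fixed seed (reproducible).
std::vector<std::vector<bool>> sample_masks(int n_features, int n_masks,
                                            bool use_random_sampling, unsigned seed) {
    std::mt19937 rng(seed);
    std::vector<std::vector<bool>> masks;
    masks.reserve(n_masks);
    for (int m = 0; m < n_masks; ++m)
        masks.push_back(sample_mask(n_features, use_random_sampling, rng));
    return masks;
}
```

Keeping each feature independently makes the mask size binomial, so most masks keep roughly half of the features. Drawing the size first spreads the masks evenly over all sizes, which is one plausible reason to offer the second mode.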

Data Fields inherited from ModelExplainer

| Type | Field | Description |
|---|---|---|
| MedPredictor * | original_predictor = NULL | The predictor being explained. |
| ExplainFilters | filters | General filters of the results. |
| ExplainProcessings | processing | Processing of the results, such as groupings and COV. |
| GlobalExplainerParams | global_explain_params | |

Data Fields inherited from PostProcessor

| Type | Field | Description |
|---|---|---|
| PostProcessorTypes | processor_type = PostProcessorTypes::FTR_POSTPROCESS_LAST | |
| int | use_split = -1 | |
| float | use_p = 0.0 | |

Additional Inherited Members

Static Public Member Functions inherited from ModelExplainer

| Type | Member | Description |
|---|---|---|
| static void | print_explain (MedSample &smp, int sort_mode=0) | |

Static Public Member Functions inherited from PostProcessor

| Type | Member | Description |
|---|---|---|
| static PostProcessor * | make_processor (const string &processor_name, const string &params="") | |
| static PostProcessor * | make_processor (PostProcessorTypes type, const string &params="") | |
| static PostProcessor * | create_processor (string &params) | |

Detailed Description

Iterative set explainer. It uses a samples generator (Gibbs, GAN, or other) or a proxy predictor to get as close as possible to the final prediction score, with the lowest variance, using the smallest possible set of variables.
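The description suggests a greedy, iterative search: start from an empty set, repeatedly add the variable whose inclusion brings the prediction closest to the original score with the lowest variance, and stop at max_set_size. The sketch below illustrates that idea under stated assumptions and is not the library's implementation. The predictor is an arbitrary function; features outside the set are imputed from a standard normal distribution, standing in for the Gibbs, GAN, or other generator; each candidate is scored as a * |mean - original| + b * variance, with a and b playing the role of sort_params_a and sort_params_b. The "change from the previous step" term mentioned for sort_params_b is left out because its exact form is not documented here. predict_with_set and greedy_explain are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <limits>
#include <random>
#include <set>
#include <vector>

// Any model mapping a feature vector to a score (hypothetical stand-in).
using Predictor = std::function<double(const std::vector<double> &)>;

struct PredStats {
    double mean;
    double var;
};

// Predict n_samples times, keeping the features in `known` at their true values
// and imputing every other feature from N(0, 1). This imputation is a
// hypothetical placeholder for a Gibbs, GAN, or other samples generator.
PredStats predict_with_set(const Predictor &pred, const std::vector<double> &x,
                           const std::set<int> &known, int n_samples, std::mt19937 &rng) {
    std::normal_distribution<double> impute(0.0, 1.0);
    double sum = 0.0, sum_sq = 0.0;
    std::vector<double> z(x.size());
    for (int s = 0; s < n_samples; ++s) {
        for (size_t i = 0; i < x.size(); ++i)
            z[i] = known.count(static_cast<int>(i)) ? x[i] : impute(rng);
        const double p = pred(z);
        sum += p;
        sum_sq += p * p;
    }
    const double mean = sum / n_samples;
    return {mean, sum_sq / n_samples - mean * mean};
}

// Greedy forward selection: at each step add the feature whose inclusion
// minimizes a * |mean - original| + b * variance, until max_set_size is reached.
std::vector<int> greedy_explain(const Predictor &pred, const std::vector<double> &x,
                                int max_set_size, double a, double b,
                                int n_samples = 200, unsigned seed = 0) {
    assert(max_set_size >= 0 && max_set_size <= static_cast<int>(x.size()));
    std::mt19937 rng(seed);
    const double original = pred(x);
    std::set<int> chosen;
    std::vector<int> order;
    while (static_cast<int>(order.size()) < max_set_size) {
        int best = -1;
        double best_score = std::numeric_limits<double>::infinity();
        for (int f = 0; f < static_cast<int>(x.size()); ++f) {
            if (chosen.count(f)) continue;
            std::set<int> candidate = chosen;
            candidate.insert(f);
            const PredStats st = predict_with_set(pred, x, candidate, n_samples, rng);
            const double score = a * std::fabs(st.mean - original) + b * st.var;
            if (score < best_score) {
                best_score = score;
                best = f;
            }
        }
        chosen.insert(best);
        order.push_back(best);
    }
    return order;
}
```

For a model such as 5 * x0 + 0.1 * x1 evaluated at x = (1, 1, 1), this sketch picks x0 first, since fixing it removes almost all of the prediction variance, and x1 second.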

Member Function Documentation

◆ _learn()

virtual void IterativeSetExplainer::_learn (const MedFeatures &train_mat)

Overload of the ModelExplainer learning function (easier API).

Implements ModelExplainer.

◆ dprint()

virtual void IterativeSetExplainer::dprint (const string &pref) const

Reimplemented from PostProcessor.

◆ explain()

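virtual void IterativeSetExplainer::explain (const MedFeatures &matrix, vector< map< string, float > > &sample_explain_reasons) const

Virtual; returns the explanation results in sample_feature_contrib.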

Implements ModelExplainer.
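explain() fills one map< string, float > per sample, presumably from a feature (or group) name to its contribution. A common follow-up is to list the strongest reasons for a sample. The following is a minimal sketch, assuming the float is a signed contribution whose magnitude reflects importance; top_reasons is a hypothetical helper, not part of the library.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: return up to top_k entries of one sample's explanation,
// ordered by absolute contribution (largest first). The input mirrors one
// element of the sample_explain_reasons vector filled by explain().
std::vector<std::pair<std::string, float>>
top_reasons(const std::map<std::string, float> &reasons, size_t top_k) {
    std::vector<std::pair<std::string, float>> ranked(reasons.begin(), reasons.end());
    // stable_sort keeps the map's alphabetical order among equal magnitudes.
    std::stable_sort(ranked.begin(), ranked.end(), [](const auto &l, const auto &r) {
        return std::fabs(l.second) > std::fabs(r.second);
    });
    if (ranked.size() > top_k) ranked.resize(top_k);
    return ranked;
}
```

Sorting by magnitude rather than by signed value keeps strong negative contributions (features that lower the score) alongside strong positive ones.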

◆ post_deserialization()

virtual void IterativeSetExplainer::post_deserialization ()

Reimplemented from SerializableObject.

The documentation for this class was generated from the following files:

- Internal/MedProcessTools/MedProcessTools/ExplainWrapper.h

- Internal/MedProcessTools/MedProcessTools/ExplainWrapper.cpp