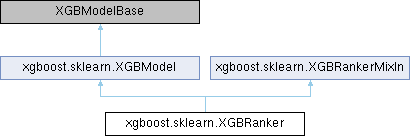

Inheritance diagram for xgboost.sklearn.XGBRanker:

Public Member Functions | |

| __init__ (self, *str objective="rank:ndcg", **Any kwargs) | |

| "XGBRanker" | fit (self, ArrayLike X, ArrayLike y, *Optional[ArrayLike] group=None, Optional[ArrayLike] qid=None, Optional[ArrayLike] sample_weight=None, Optional[ArrayLike] base_margin=None, Optional[Sequence[Tuple[ArrayLike, ArrayLike]]] eval_set=None, Optional[Sequence[ArrayLike]] eval_group=None, Optional[Sequence[ArrayLike]] eval_qid=None, Optional[Union[str, Sequence[str], Metric]] eval_metric=None, Optional[int] early_stopping_rounds=None, Optional[Union[bool, int]] verbose=False, Optional[Union[Booster, str, XGBModel]] xgb_model=None, Optional[Sequence[ArrayLike]] sample_weight_eval_set=None, Optional[Sequence[ArrayLike]] base_margin_eval_set=None, Optional[ArrayLike] feature_weights=None, Optional[Sequence[TrainingCallback]] callbacks=None) |

| ArrayLike | predict (self, ArrayLike X, bool output_margin=False, bool validate_features=True, Optional[ArrayLike] base_margin=None, Optional[Tuple[int, int]] iteration_range=None) |

| ArrayLike | apply (self, ArrayLike X, Optional[Tuple[int, int]] iteration_range=None) |

| float | score (self, ArrayLike X, ArrayLike y) |

Public Member Functions inherited from xgboost.sklearn.XGBModel Public Member Functions inherited from xgboost.sklearn.XGBModel | |

| bool | __sklearn_is_fitted__ (self) |

| Booster | get_booster (self) |

| "XGBModel" | set_params (self, **Any params) |

| Dict[str, Any] | get_params (self, bool deep=True) |

| Dict[str, Any] | get_xgb_params (self) |

| int | get_num_boosting_rounds (self) |

| None | save_model (self, Union[str, os.PathLike] fname) |

| None | load_model (self, ModelIn fname) |

| Dict[str, Dict[str, List[float]]] | evals_result (self) |

| int | n_features_in_ (self) |

| np.ndarray | feature_names_in_ (self) |

| float | best_score (self) |

| int | best_iteration (self) |

| np.ndarray | feature_importances_ (self) |

| np.ndarray | coef_ (self) |

| np.ndarray | intercept_ (self) |

Data Fields | |

| objective | |

| eval_metric | |

Data Fields inherited from xgboost.sklearn.XGBModel Data Fields inherited from xgboost.sklearn.XGBModel | |

| n_estimators | |

| objective | |

| max_depth | |

| max_leaves | |

| max_bin | |

| grow_policy | |

| learning_rate | |

| verbosity | |

| booster | |

| tree_method | |

| gamma | |

| min_child_weight | |

| max_delta_step | |

| subsample | |

| sampling_method | |

| colsample_bytree | |

| colsample_bylevel | |

| colsample_bynode | |

| reg_alpha | |

| reg_lambda | |

| scale_pos_weight | |

| base_score | |

| missing | |

| num_parallel_tree | |

| random_state | |

| n_jobs | |

| monotone_constraints | |

| interaction_constraints | |

| importance_type | |

| device | |

| validate_parameters | |

| enable_categorical | |

| feature_types | |

| max_cat_to_onehot | |

| max_cat_threshold | |

| multi_strategy | |

| eval_metric | |

| early_stopping_rounds | |

| callbacks | |

| kwargs | |

| n_classes_ | |

| evals_result_ | |

Protected Member Functions | |

| DMatrix | _create_ltr_dmatrix (self, Optional[DMatrix] ref, ArrayLike data, ArrayLike qid, **Any kwargs) |

Protected Member Functions inherited from xgboost.sklearn.XGBModel Protected Member Functions inherited from xgboost.sklearn.XGBModel | |

| Dict[str, bool] | _more_tags (self) |

| str | _get_type (self) |

| None | _load_model_attributes (self, dict config) |

| Tuple[ Optional[Union[Booster, str, "XGBModel"]], Optional[Metric], Dict[str, Any], Optional[int], Optional[Sequence[TrainingCallback]],] | _configure_fit (self, Optional[Union[Booster, "XGBModel", str]] booster, Optional[Union[Callable, str, Sequence[str]]] eval_metric, Dict[str, Any] params, Optional[int] early_stopping_rounds, Optional[Sequence[TrainingCallback]] callbacks) |

| DMatrix | _create_dmatrix (self, Optional[DMatrix] ref, **Any kwargs) |

| None | _set_evaluation_result (self, TrainingCallback.EvalsLog evals_result) |

| bool | _can_use_inplace_predict (self) |

| Tuple[int, int] | _get_iteration_range (self, Optional[Tuple[int, int]] iteration_range) |

Protected Attributes | |

| _Booster | |

Protected Attributes inherited from xgboost.sklearn.XGBModel Protected Attributes inherited from xgboost.sklearn.XGBModel | |

| _Booster | |

Additional Inherited Members | |

Static Protected Attributes inherited from xgboost.sklearn.XGBRankerMixIn Static Protected Attributes inherited from xgboost.sklearn.XGBRankerMixIn | |

| str | _estimator_type = "ranker" |

Constructor & Destructor Documentation

◆ __init__()

| xgboost.sklearn.XGBRanker.__init__ | ( | self, | |

| *str | objective = "rank:ndcg", |

||

| **Any | kwargs | ||

| ) |

Reimplemented from xgboost.sklearn.XGBModel.

Member Function Documentation

◆ apply()

| ArrayLike xgboost.sklearn.XGBRanker.apply | ( | self, | |

| ArrayLike | X, | ||

| Optional[Tuple[int, int]] | iteration_range = None |

||

| ) |

Return the predicted leaf every tree for each sample. If the model is trained

with early stopping, then :py:attr:`best_iteration` is used automatically.

Parameters

----------

X : array_like, shape=[n_samples, n_features]

Input features matrix.

iteration_range :

See :py:meth:`predict`.

Returns

-------

X_leaves : array_like, shape=[n_samples, n_trees]

For each datapoint x in X and for each tree, return the index of the

leaf x ends up in. Leaves are numbered within

``[0; 2**(self.max_depth+1))``, possibly with gaps in the numbering.

Reimplemented from xgboost.sklearn.XGBModel.

◆ fit()

| "XGBRanker" xgboost.sklearn.XGBRanker.fit | ( | self, | |

| ArrayLike | X, | ||

| ArrayLike | y, | ||

| *Optional[ArrayLike] | group = None, |

||

| Optional[ArrayLike] | qid = None, |

||

| Optional[ArrayLike] | sample_weight = None, |

||

| Optional[ArrayLike] | base_margin = None, |

||

| Optional[Sequence[Tuple[ArrayLike, ArrayLike]]] | eval_set = None, |

||

| Optional[Sequence[ArrayLike]] | eval_group = None, |

||

| Optional[Sequence[ArrayLike]] | eval_qid = None, |

||

| Optional[Union[str, Sequence[str], Metric]] | eval_metric = None, |

||

| Optional[int] | early_stopping_rounds = None, |

||

| Optional[Union[bool, int]] | verbose = False, |

||

| Optional[Union[Booster, str, XGBModel]] | xgb_model = None, |

||

| Optional[Sequence[ArrayLike]] | sample_weight_eval_set = None, |

||

| Optional[Sequence[ArrayLike]] | base_margin_eval_set = None, |

||

| Optional[ArrayLike] | feature_weights = None, |

||

| Optional[Sequence[TrainingCallback]] | callbacks = None |

||

| ) |

Fit gradient boosting ranker

Note that calling ``fit()`` multiple times will cause the model object to be

re-fit from scratch. To resume training from a previous checkpoint, explicitly

pass ``xgb_model`` argument.

Parameters

----------

X :

Feature matrix. See :ref:`py-data` for a list of supported types.

When this is a :py:class:`pandas.DataFrame` or a :py:class:`cudf.DataFrame`,

it may contain a special column called ``qid`` for specifying the query

index. Using a special column is the same as using the `qid` parameter,

except for being compatible with sklearn utility functions like

:py:func:`sklearn.model_selection.cross_validation`. The same convention

applies to the :py:meth:`XGBRanker.score` and :py:meth:`XGBRanker.predict`.

+-----+----------------+----------------+

| qid | feat_0 | feat_1 |

+-----+----------------+----------------+

| 0 | :math:`x_{00}` | :math:`x_{01}` |

+-----+----------------+----------------+

| 1 | :math:`x_{10}` | :math:`x_{11}` |

+-----+----------------+----------------+

| 1 | :math:`x_{20}` | :math:`x_{21}` |

+-----+----------------+----------------+

When the ``tree_method`` is set to ``hist``, internally, the

:py:class:`QuantileDMatrix` will be used instead of the :py:class:`DMatrix`

for conserving memory. However, this has performance implications when the

device of input data is not matched with algorithm. For instance, if the

input is a numpy array on CPU but ``cuda`` is used for training, then the

data is first processed on CPU then transferred to GPU.

y :

Labels

group :

Size of each query group of training data. Should have as many elements as

the query groups in the training data. If this is set to None, then user

must provide qid.

qid :

Query ID for each training sample. Should have the size of n_samples. If

this is set to None, then user must provide group or a special column in X.

sample_weight :

Query group weights

.. note:: Weights are per-group for ranking tasks

In ranking task, one weight is assigned to each query group/id (not each

data point). This is because we only care about the relative ordering of

data points within each group, so it doesn't make sense to assign

weights to individual data points.

base_margin :

Global bias for each instance.

eval_set :

A list of (X, y) tuple pairs to use as validation sets, for which

metrics will be computed.

Validation metrics will help us track the performance of the model.

eval_group :

A list in which ``eval_group[i]`` is the list containing the sizes of all

query groups in the ``i``-th pair in **eval_set**.

eval_qid :

A list in which ``eval_qid[i]`` is the array containing query ID of ``i``-th

pair in **eval_set**. The special column convention in `X` applies to

validation datasets as well.

eval_metric : str, list of str, optional

.. deprecated:: 1.6.0

use `eval_metric` in :py:meth:`__init__` or :py:meth:`set_params` instead.

early_stopping_rounds : int

.. deprecated:: 1.6.0

use `early_stopping_rounds` in :py:meth:`__init__` or

:py:meth:`set_params` instead.

verbose :

If `verbose` is True and an evaluation set is used, the evaluation metric

measured on the validation set is printed to stdout at each boosting stage.

If `verbose` is an integer, the evaluation metric is printed at each

`verbose` boosting stage. The last boosting stage / the boosting stage found

by using `early_stopping_rounds` is also printed.

xgb_model :

file name of stored XGBoost model or 'Booster' instance XGBoost model to be

loaded before training (allows training continuation).

sample_weight_eval_set :

A list of the form [L_1, L_2, ..., L_n], where each L_i is a list of

group weights on the i-th validation set.

.. note:: Weights are per-group for ranking tasks

In ranking task, one weight is assigned to each query group (not each

data point). This is because we only care about the relative ordering of

data points within each group, so it doesn't make sense to assign

weights to individual data points.

base_margin_eval_set :

A list of the form [M_1, M_2, ..., M_n], where each M_i is an array like

object storing base margin for the i-th validation set.

feature_weights :

Weight for each feature, defines the probability of each feature being

selected when colsample is being used. All values must be greater than 0,

otherwise a `ValueError` is thrown.

callbacks :

.. deprecated:: 1.6.0

Use `callbacks` in :py:meth:`__init__` or :py:meth:`set_params` instead.

Reimplemented from xgboost.sklearn.XGBModel.

◆ predict()

| ArrayLike xgboost.sklearn.XGBRanker.predict | ( | self, | |

| ArrayLike | X, | ||

| bool | output_margin = False, |

||

| bool | validate_features = True, |

||

| Optional[ArrayLike] | base_margin = None, |

||

| Optional[Tuple[int, int]] | iteration_range = None |

||

| ) |

Predict with `X`. If the model is trained with early stopping, then

:py:attr:`best_iteration` is used automatically. The estimator uses

`inplace_predict` by default and falls back to using :py:class:`DMatrix` if

devices between the data and the estimator don't match.

.. note:: This function is only thread safe for `gbtree` and `dart`.

Parameters

----------

X :

Data to predict with.

output_margin :

Whether to output the raw untransformed margin value.

validate_features :

When this is True, validate that the Booster's and data's feature_names are

identical. Otherwise, it is assumed that the feature_names are the same.

base_margin :

Margin added to prediction.

iteration_range :

Specifies which layer of trees are used in prediction. For example, if a

random forest is trained with 100 rounds. Specifying ``iteration_range=(10,

20)``, then only the forests built during [10, 20) (half open set) rounds

are used in this prediction.

.. versionadded:: 1.4.0

Returns

-------

prediction

Reimplemented from xgboost.sklearn.XGBModel.

◆ score()

| float xgboost.sklearn.XGBRanker.score | ( | self, | |

| ArrayLike | X, | ||

| ArrayLike | y | ||

| ) |

Evaluate score for data using the last evaluation metric. If the model is trained with early stopping, then :py:attr:`best_iteration` is used automatically. Parameters ---------- X : Union[pd.DataFrame, cudf.DataFrame] Feature matrix. A DataFrame with a special `qid` column. y : Labels Returns ------- score : The result of the first evaluation metric for the ranker.

The documentation for this class was generated from the following file:

- External/xgboost/python-package/xgboost/sklearn.py