| Return type | Member function | Description |
|---|---|---|
| `int` | `init(map<string, string> &map)` | Extra param for use when debugging. |
| `virtual int` | `version() const` | Relevant for serializations. |
| `virtual string` | `my_class_name() const` | For better handling of serializations, it is highly recommended that each class inheriting from SerializableObject implement this method. |
| `virtual void` | `serialized_fields_name(vector<string> &field_names) const` | The names of the serialized fields. |
| `virtual void *` | `new_polymorphic(string derived_name)` | For polymorphic classes that need to serialize/deserialize a pointer to a derived class: implement this function to return a new instance of the derived class given its type name (as in `my_type`). |
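The `new_polymorphic` contract can be illustrated with a small name-to-object factory. This is a minimal sketch under our own assumptions: `Shape`, `Circle`, `Square` and the helper `make_shape` are hypothetical, and the functions are free functions rather than SerializableObject members. Returning `void *` mirrors the documented signature; the new object is converted to its base pointer type before becoming `void *`, so the caller can safely cast it back.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical polymorphic hierarchy used only for illustration.
struct Shape {
    virtual ~Shape() = default;
    virtual std::string my_class_name() const = 0;
};
struct Circle : Shape {
    std::string my_class_name() const override { return "Circle"; }
};
struct Square : Shape {
    std::string my_class_name() const override { return "Square"; }
};

// Sketch of a new_polymorphic-style factory: map a derived-class name to a
// freshly allocated object. The object is stored as Shape* first, so casting
// the void* back to Shape* is well defined.
void* new_polymorphic(const std::string& derived_name) {
    Shape* obj = nullptr;
    if (derived_name == "Circle") obj = new Circle();
    else if (derived_name == "Square") obj = new Square();
    return obj;  // nullptr for unknown names
}

// Caller side: recover an owned, typed pointer from the void* result.
std::unique_ptr<Shape> make_shape(const std::string& name) {
    std::unique_ptr<Shape> p(static_cast<Shape*>(new_polymorphic(name)));
    assert(!p || p->my_class_name() == name);  // factory returned the requested type
    return p;
}
```

Converting through the base type matters: casting a `Circle*` straight to `void *` and then reading it back as `Shape*` is not guaranteed to work once multiple inheritance is involved.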

| Return type | Member function | Description |
|---|---|---|
| `virtual void` | `pre_serialization()` | |
| `virtual void` | `post_deserialization()` | |
| `virtual size_t` | `get_size()` | Gets the size in bytes needed for serialization. |
| `virtual size_t` | `serialize(unsigned char *blob)` | Serializes the object into `blob` memory; returns the number of bytes written. |
| `virtual size_t` | `deserialize(unsigned char *blob)` | Deserializes `blob` into the object; returns the number of bytes read. |
| `size_t` | `serialize_vec(vector<unsigned char> &blob)` | |
| `size_t` | `deserialize_vec(vector<unsigned char> &blob)` | |
| `virtual size_t` | `serialize(vector<unsigned char> &blob)` | |
| `virtual size_t` | `deserialize(vector<unsigned char> &blob)` | |
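`get_size`, `serialize` and `deserialize` together form a byte-count contract: size the buffer, write into it, then read the same layout back, with each call reporting how many bytes it touched. The sketch below assumes a hypothetical `ParamsSketch` holding a few fields named after attributes of this class; the raw `memcpy` layout and the length prefix for the vector are our own choices, not the library's format, and the `pre_serialization`/`post_deserialization` hooks are omitted. Signatures are simplified (for example, `deserialize` takes a `const` pointer).

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical object used to illustrate the size/serialize/deserialize contract.
struct ParamsSketch {
    int ntrees = 50;
    float bag_prob = 0.5f;
    std::vector<int> time_slices;

    // Bytes needed: two scalars, a length prefix, then the vector's elements.
    std::size_t get_size() const {
        return sizeof(ntrees) + sizeof(bag_prob) + sizeof(std::size_t) +
               time_slices.size() * sizeof(int);
    }

    // Writes into caller-provided memory; returns the number of bytes written.
    std::size_t serialize(unsigned char* blob) const {
        unsigned char* p = blob;
        std::memcpy(p, &ntrees, sizeof(ntrees));     p += sizeof(ntrees);
        std::memcpy(p, &bag_prob, sizeof(bag_prob)); p += sizeof(bag_prob);
        const std::size_t n = time_slices.size();
        std::memcpy(p, &n, sizeof(n));               p += sizeof(n);
        if (n > 0) {
            std::memcpy(p, time_slices.data(), n * sizeof(int));
            p += n * sizeof(int);
        }
        return static_cast<std::size_t>(p - blob);
    }

    // Reads the same layout back; returns the number of bytes read.
    std::size_t deserialize(const unsigned char* blob) {
        const unsigned char* p = blob;
        std::memcpy(&ntrees, p, sizeof(ntrees));     p += sizeof(ntrees);
        std::memcpy(&bag_prob, p, sizeof(bag_prob)); p += sizeof(bag_prob);
        std::size_t n = 0;
        std::memcpy(&n, p, sizeof(n));               p += sizeof(n);
        time_slices.resize(n);
        if (n > 0) {
            std::memcpy(time_slices.data(), p, n * sizeof(int));
            p += n * sizeof(int);
        }
        return static_cast<std::size_t>(p - blob);
    }

    // serialize_vec-style wrapper: size the buffer with get_size(), then fill it.
    std::size_t serialize_vec(std::vector<unsigned char>& blob) const {
        blob.resize(get_size());
        const std::size_t written = serialize(blob.data());
        assert(written == blob.size());
        return written;
    }
};
```

Because `memcpy` copies the in-memory representation, a blob written this way is only safe to read back on a platform with the same type sizes and byte order.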

| Return type | Member function | Description |
|---|---|---|
| `virtual int` | `read_from_file(const string &fname)` | Reads and deserializes the model. |
| `virtual int` | `write_to_file(const string &fname)` | Serializes the model and writes it to a file. |
| `virtual int` | `read_from_file_unsafe(const string &fname)` | Reads and deserializes the model without checking the version number (unsafe read). |
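The only stated difference between `read_from_file` and `read_from_file_unsafe` is whether the version number is checked. A minimal sketch, assuming a hypothetical file layout of one `int` version followed by the payload bytes, a hypothetical `kCurrentVersion`, and a 0 / -1 return convention; unlike the real methods, these free functions take the payload explicitly instead of deserializing into the object.

```cpp
#include <cassert>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// Assumed file layout for this sketch: [int version][payload bytes ...].
constexpr int kCurrentVersion = 3;  // hypothetical version number

int write_to_file(const std::string& fname, const std::vector<unsigned char>& payload) {
    std::ofstream out(fname, std::ios::binary);
    if (!out) return -1;
    const int version = kCurrentVersion;
    out.write(reinterpret_cast<const char*>(&version), sizeof(version));
    if (!payload.empty())
        out.write(reinterpret_cast<const char*>(payload.data()),
                  static_cast<std::streamsize>(payload.size()));
    return out ? 0 : -1;
}

// Shared reader; check_version == false gives the "unsafe" variant.
static int read_impl(const std::string& fname, std::vector<unsigned char>& payload,
                     bool check_version) {
    std::ifstream in(fname, std::ios::binary);
    if (!in) return -1;
    int version = 0;
    if (!in.read(reinterpret_cast<char*>(&version), sizeof(version))) return -1;
    if (check_version && version != kCurrentVersion) return -1;  // refuse other versions
    payload.assign(std::istreambuf_iterator<char>(in), std::istreambuf_iterator<char>());
    return 0;
}

int read_from_file(const std::string& fname, std::vector<unsigned char>& payload) {
    return read_impl(fname, payload, true);
}

int read_from_file_unsafe(const std::string& fname, std::vector<unsigned char>& payload) {
    return read_impl(fname, payload, false);
}
```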

| Return type | Member function | Description |
|---|---|---|
| `int` | `init_from_string(string init_string)` | Initializes from a string. |
| `int` | `init_params_from_file(string init_file)` | |
| `int` | `init_param_from_file(string file_str, string &param)` | |
| `int` | `update_from_string(const string &init_string)` | |
| `virtual int` | `update(map<string, string> &map)` | Updates the object from parsed fields (virtual). |
| `virtual string` | `object_json() const` | |
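`init_from_string` presumably parses a parameter string into a map of fields and hands it to `init(map)`. The sketch below assumes a `key=value;key=value` string format and a 0 / -1 return convention; `parse_init_string` and `TqrfParamsSketch` are hypothetical, and only four of the attributes listed for this class are handled.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Assumed init-string format: "key1=val1;key2=val2" (separators are an assumption).
std::map<std::string, std::string> parse_init_string(const std::string& init_string) {
    std::map<std::string, std::string> fields;
    std::stringstream ss(init_string);
    std::string item;
    while (std::getline(ss, item, ';')) {
        if (item.empty()) continue;
        const std::size_t eq = item.find('=');
        if (eq == std::string::npos) continue;  // skip malformed tokens
        fields[item.substr(0, eq)] = item.substr(eq + 1);
    }
    return fields;
}

// Hypothetical subset of the parameters, with the documented defaults.
struct TqrfParamsSketch {
    int ntrees = 50;
    int max_depth = 100;
    float ntry_prob = 0.1f;
    std::string tree_type;

    // Mirrors init(map): copy recognized keys, reject unknown ones.
    int init(const std::map<std::string, std::string>& fields) {
        for (const auto& kv : fields) {
            if (kv.first == "ntrees") ntrees = std::stoi(kv.second);
            else if (kv.first == "max_depth") max_depth = std::stoi(kv.second);
            else if (kv.first == "ntry_prob") ntry_prob = std::stof(kv.second);
            else if (kv.first == "tree_type") tree_type = kv.second;
            else return -1;  // unknown parameter
        }
        return 0;
    }

    int init_from_string(const std::string& s) { return init(parse_init_string(s)); }
};
```

Keys that are not mentioned keep their defaults, so a string such as `ntrees=200;tree_type=logrank` changes only those two fields.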

| Type | Attribute | Description |
|---|---|---|
| `string` | `init_string = ""` | Sometimes it helps to keep it for debugging. |
| `string` | `samples_time_unit = "Date"` | |
| `int` | `samples_time_unit_i` | Calculated upon init. |
| `int` | `ncateg = 2` | Number of categories (1 for regression). |
| `string` | `time_slice_unit = "Days"` | |
| `int` | `time_slice_unit_i` | Calculated upon init. |
| `int` | `time_slice_size = -1` | The size of the basic time slice; -1 acts like infinity: a single time slice, as in a regular QRF. |
| `int` | `n_time_slices = 1` | If the `time_slices` vector is not given, one is created using `time_slice_size` and this parameter. |
| `vector<int>` | `time_slices = {}` | If not empty, defines the borders of all the time slices. Enables a very flexible time-slicing strategy. |
| `vector<float>` | `time_slices_wgts = {}` | Defaults to all 1.0 but can be set by the user; used to weight the scores from different time windows. |
| `int` | `censor_cases = 0` | When calculating the time-slice distributions, there is an option NOT to count the preceding 0's of non-0 cases. |
| `int` | `max_q = 200` | Maximal quantization. |
| `string` | `tree_type = ""` | Options: regression, entropy, logrank. |
| `int` | `tree_type_i = -1` | Tree type code, calculated from the `tree_type` string. |
| `int` | `ntrees = 50` | Number of trees to learn. |
| `int` | `max_depth = 100` | Maximal depth of a tree. |
| `int` | `min_node_last_slice = 10` | Stopping criterion: minimal number of samples in a node in the last time slice. |
| `int` | `min_node = 10` | Stopping criterion: minimal number of samples in a node in the first time slice. |
| `float` | `random_split_prob = 0` | With this probability a node is split at random, to add noise to the tree. |
| `int` | `ntry = -1` | -1: use the `ntry_prob` rule; > 0: choose this number of features. |
| `float` | `ntry_prob = (float)0.1` | Choose `ntry_prob * nfeat` features each time. |
| `int` | `nsplits = -1` | -1: check all splits for each feature, then split at the maximum; > 0: choose this number of split points at random and pick the best. |
| `int` | `max_node_test_samples = 50000` | When a node is bigger than this number, choose this number of random samples to make decisions. |
| `int` | `single_sample_per_pid = 1` | When bagging, select a single sample per pid (which can itself be repeated). |
| `int` | `bag_with_repeats = 1` | Whether to bag with repeats or not. |
| `float` | `bag_prob = (float)0.5` | Probability used in the random choice of samples for each tree. |
| `float` | `bag_ratio = -1` | Controls the ratio of #0 : #NonZero labels; if < -1, leave as is. |
| `float` | `bag_feat = (float)1.0` | Proportion of random features chosen for each tree. |
| `int` | `qpoints_per_split = 0` | If > 0, only this number of random points is tested as split points; otherwise all of them are tested. |
| `int` | `nvals_for_categorial = 0` | Features with fewer distinct values than `nvals_for_categorial` are assumed categorical. |
| `vector<string>` | `categorial_str` | All features containing one of the strings defined here in their name are assumed categorical. |
| `vector<string>` | `categorial_tags` | All features containing these tags are assumed categorical. |
| `float` | `missing_val = MED_MAT_MISSING_VALUE` | Missing value. |
| `string` | `missing_method_str = "median"` | How to handle missing values: median, left, right, mean, rand. |
| `int` | `missing_method = -1` | Initialized from `missing_method_str`. |
| `int` | `test_for_inf = 1` | Fail on non-finite values in the input data. |
| `int` | `test_for_missing = 0` | Fail if a missing value is found in the data. |
| `int` | `only_this_categ = -1` | Relevant only to categorical predictions: -1 gives all categories; 0 and above gives only that category. Note that 0 is currently a special category in TQRF: the control category (nothing happens, healthy, etc.). |
| `int` | `predict_from_slice = -1` | Predictions are given for slices [`predict_from_slice`, ..., `predict_to_slice`]; if negative, all slices. |
| `int` | `predict_to_slice = -1` | |
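The time-slicing attributes interact: when `time_slices` is empty it is built from `time_slice_size` and `n_time_slices`, and `time_slice_size = -1` means a single slice as in a regular QRF. The sketch below assumes the borders are cumulative upper ends of each slice (so size 365 with 3 slices gives 365, 730, 1095), represents the single "infinite" slice with `INT_MAX`, and treats a negative `predict_from_slice` or `predict_to_slice` as no limit on that side. `make_time_slices` and `predict_range` are hypothetical helpers.

```cpp
#include <cassert>
#include <climits>
#include <utility>
#include <vector>

// Assumed semantics: each border is the cumulative upper end of a slice.
std::vector<int> make_time_slices(const std::vector<int>& time_slices,
                                  int time_slice_size, int n_time_slices) {
    if (!time_slices.empty()) return time_slices;  // user-defined borders take priority
    if (time_slice_size < 0) return {INT_MAX};     // -1: a single "infinite" slice
    assert(n_time_slices > 0);
    std::vector<int> borders;
    for (int i = 1; i <= n_time_slices; ++i) borders.push_back(i * time_slice_size);
    return borders;
}

// Inclusive range of slice indices to predict; a negative bound means no limit.
std::pair<int, int> predict_range(int predict_from_slice, int predict_to_slice, int n_slices) {
    const int lo = predict_from_slice < 0 ? 0 : predict_from_slice;
    const int hi = predict_to_slice < 0 ? n_slices - 1 : predict_to_slice;
    return {lo, hi};
}
```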

| Type | Attribute | Description |
|---|---|---|
| `int` | `predict_sum_times = 0` | Sum predictions over different times. |
| `float` | `case_wgt = 1` | The weight to use for cases with y != 0 in a weighted case. |
| `int` | `nrounds = 1` | A single round means simply running TQRF as defined, with no boosting applied. |
| `float` | `min_p = (float)0.01` | Minimal probability to trim to when recalculating weights. |
| `float` | `max_p = (float)0.99` | Maximal probability to trim to when recalculating weights. |
| `float` | `alpha = 1` | Shrinkage factor. |
| `float` | `wgts_pow = 2` | Power in `pow(-log(p), wgts_pow)`, used for the AdaBoost weights. |
| `float` | `tuning_size = 0` | Size of the group used to tune tree weights. |
| `int` | `tune_max_depth = 0` | Maximal depth of a node that gets a weight; 0 means one weight per tree. |
| `int` | `tune_min_node_size = 0` | Minimal node size for a node to have a weight. |
| `float` | `gd_rate = (float)0.01` | Gradient descent step size. |
| `int` | `gd_batch = 1000` | Gradient descent batch size. |
| `float` | `gd_momentum = (float)0.95` | Gradient descent momentum. |
| `float` | `gd_lambda = 0` | Regularization. |
| `int` | `gd_epochs = 0` | 0: stop automatically; otherwise, run this number of epochs. |
| `int` | `verbosity = 0` | For debug prints. |
| `int` | `ids_to_print = 30` | Controls debug prints in certain places. |
| `int` | `debug = 0` | |
| `vector<double>` | `log_table` | |
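The boosting attributes describe per-sample weights of the form pow(-log(p), `wgts_pow`), with p trimmed to [`min_p`, `max_p`]. A minimal sketch, assuming p is the predicted probability of each sample's true label; how `alpha`, `case_wgt` and the tree-weight tuning parameters enter the actual update is not stated here, so they are left out. `boosting_weights` is a hypothetical helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// p_true[i]: predicted probability of sample i's true label (assumed input).
// Defaults match the documented min_p, max_p and wgts_pow values.
std::vector<float> boosting_weights(const std::vector<float>& p_true,
                                    float min_p = 0.01f, float max_p = 0.99f,
                                    float wgts_pow = 2.0f) {
    assert(0.0f < min_p && min_p <= max_p && max_p < 1.0f);
    std::vector<float> w;
    w.reserve(p_true.size());
    for (float p : p_true) {
        const float pc = std::clamp(p, min_p, max_p);    // trim to [min_p, max_p]
        w.push_back(std::pow(-std::log(pc), wgts_pow));  // pow(-log(p), wgts_pow)
    }
    return w;
}
```

Trimming keeps a confidently correct prediction (p near 1) from getting a weight of exactly 0 and a confidently wrong one (p near 0) from getting an infinite weight.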

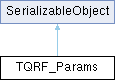

Public Member Functions inherited from SerializableObject
