| Return type | Member |
|---|---|
| `Any` | `predict (self, _DataT X, bool output_margin=False, bool validate_features=True, Optional[_DaskCollection] base_margin=None, Optional[Tuple[int, int]] iteration_range=None)` |
| `Any` | `apply (self, _DataT X, Optional[Tuple[int, int]] iteration_range=None)` |
| `Awaitable[Any]` | `__await__ (self)` |
| `Dict` | `__getstate__ (self)` |
| `"distributed.Client"` | `client (self)` |
| `None` | `client (self, "distributed.Client" clt)` |
| `None` | `__init__ (self, Optional[int] max_depth=None, Optional[int] max_leaves=None, Optional[int] max_bin=None, Optional[str] grow_policy=None, Optional[float] learning_rate=None, Optional[int] n_estimators=None, Optional[int] verbosity=None, SklObjective objective=None, Optional[str] booster=None, Optional[str] tree_method=None, Optional[int] n_jobs=None, Optional[float] gamma=None, Optional[float] min_child_weight=None, Optional[float] max_delta_step=None, Optional[float] subsample=None, Optional[str] sampling_method=None, Optional[float] colsample_bytree=None, Optional[float] colsample_bylevel=None, Optional[float] colsample_bynode=None, Optional[float] reg_alpha=None, Optional[float] reg_lambda=None, Optional[float] scale_pos_weight=None, Optional[float] base_score=None, Optional[Union[np.random.RandomState, int]] random_state=None, float missing=np.nan, Optional[int] num_parallel_tree=None, Optional[Union[Dict[str, int], str]] monotone_constraints=None, Optional[Union[str, Sequence[Sequence[str]]]] interaction_constraints=None, Optional[str] importance_type=None, Optional[str] device=None, Optional[bool] validate_parameters=None, bool enable_categorical=False, Optional[FeatureTypes] feature_types=None, Optional[int] max_cat_to_onehot=None, Optional[int] max_cat_threshold=None, Optional[str] multi_strategy=None, Optional[Union[str, List[str], Callable]] eval_metric=None, Optional[int] early_stopping_rounds=None, Optional[List[TrainingCallback]] callbacks=None, **Any kwargs)` |
| `bool` | `__sklearn_is_fitted__ (self)` |
| `Booster` | `get_booster (self)` |
| `"XGBModel"` | `set_params (self, **Any params)` |
| `Dict[str, Any]` | `get_params (self, bool deep=True)` |
| `Dict[str, Any]` | `get_xgb_params (self)` |
| `int` | `get_num_boosting_rounds (self)` |
| `None` | `save_model (self, Union[str, os.PathLike] fname)` |
| `None` | `load_model (self, ModelIn fname)` |
| `"XGBModel"` | `fit (self, ArrayLike X, ArrayLike y, *Optional[ArrayLike] sample_weight=None, Optional[ArrayLike] base_margin=None, Optional[Sequence[Tuple[ArrayLike, ArrayLike]]] eval_set=None, Optional[Union[str, Sequence[str], Metric]] eval_metric=None, Optional[int] early_stopping_rounds=None, Optional[Union[bool, int]] verbose=True, Optional[Union[Booster, str, "XGBModel"]] xgb_model=None, Optional[Sequence[ArrayLike]] sample_weight_eval_set=None, Optional[Sequence[ArrayLike]] base_margin_eval_set=None, Optional[ArrayLike] feature_weights=None, Optional[Sequence[TrainingCallback]] callbacks=None)` |
| `Dict[str, Dict[str, List[float]]]` | `evals_result (self)` |
| `int` | `n_features_in_ (self)` |
| `np.ndarray` | `feature_names_in_ (self)` |
| `float` | `best_score (self)` |
| `int` | `best_iteration (self)` |
| `np.ndarray` | `feature_importances_ (self)` |
| `np.ndarray` | `coef_ (self)` |
| `np.ndarray` | `intercept_ (self)` |
| `Any` | `_predict_async (self, _DataT data, bool output_margin, bool validate_features, Optional[_DaskCollection] base_margin, Optional[Tuple[int, int]] iteration_range)` |
| `Any` | `_apply_async (self, _DataT X, Optional[Tuple[int, int]] iteration_range=None)` |
| `Any` | `_client_sync (self, Callable func, **Any kwargs)` |
| `Dict[str, bool]` | `_more_tags (self)` |
| `str` | `_get_type (self)` |
| `None` | `_load_model_attributes (self, dict config)` |
| `Tuple[ Optional[Union[Booster, str, "XGBModel"]], Optional[Metric], Dict[str, Any], Optional[int], Optional[Sequence[TrainingCallback]],]` | `_configure_fit (self, Optional[Union[Booster, "XGBModel", str]] booster, Optional[Union[Callable, str, Sequence[str]]] eval_metric, Dict[str, Any] params, Optional[int] early_stopping_rounds, Optional[Sequence[TrainingCallback]] callbacks)` |
| `DMatrix` | `_create_dmatrix (self, Optional[DMatrix] ref, **Any kwargs)` |
| `None` | `_set_evaluation_result (self, TrainingCallback.EvalsLog evals_result)` |
| `bool` | `_can_use_inplace_predict (self)` |
| `Tuple[int, int]` | `_get_iteration_range (self, Optional[Tuple[int, int]] iteration_range)` |

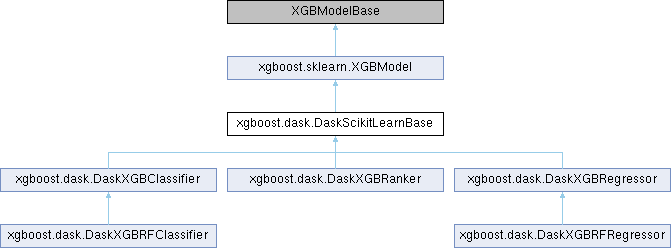

Base class for implementing the scikit-learn interface with Dask.

`Any xgboost.dask.DaskScikitLearnBase.apply (self, _DataT X, Optional[Tuple[int, int]] iteration_range = None)`

Return the predicted leaf of every tree for each sample. If the model is trained with early stopping, then :py:attr:`best_iteration` is used automatically.

Parameters
----------
X : array_like, shape=[n_samples, n_features]
    Input features matrix.
iteration_range :
    See :py:meth:`predict`.

Returns
-------
X_leaves : array_like, shape=[n_samples, n_trees]
    For each datapoint x in X and for each tree, return the index of the leaf x ends up in. Leaves are numbered within ``[0; 2**(self.max_depth+1))``, possibly with gaps in the numbering.

Reimplemented from xgboost.sklearn.XGBModel.
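The leaf-index range stated for `apply` is consistent with a simple node count: a binary tree of depth d has at most 1 + 2 + ... + 2**d = 2**(d+1) - 1 nodes, so ids drawn from such a tree stay below 2**(d+1). The sketch below checks that bound on a hypothetical `X_leaves` array shaped `[n_samples, n_trees]` (the values are made up for illustration, not real `apply` output) and counts how many samples end in each leaf of each tree, one common way to consume leaf indices. Plain Python lists stand in for the array, and `max_nodes`, `check_leaf_bound`, and `leaf_counts` are hypothetical helpers, not part of xgboost.

```python
from collections import Counter
from typing import List


def max_nodes(max_depth: int) -> int:
    """Upper bound on the node count of a binary tree of the given depth."""
    # Level k holds at most 2**k nodes, for k = 0..max_depth.
    return sum(2**level for level in range(max_depth + 1))


def check_leaf_bound(x_leaves: List[List[int]], max_depth: int) -> bool:
    """True if every leaf index lies in the half-open range [0, 2**(max_depth + 1))."""
    bound = 2 ** (max_depth + 1)
    return all(0 <= leaf < bound for row in x_leaves for leaf in row)


def leaf_counts(x_leaves: List[List[int]]) -> List[Counter]:
    """For each tree (column), count how many samples end in each leaf."""
    n_trees = len(x_leaves[0]) if x_leaves else 0
    return [Counter(row[t] for row in x_leaves) for t in range(n_trees)]


# Hypothetical apply() output: 4 samples (rows) x 2 trees (columns), max_depth=2.
x_leaves = [
    [3, 3],
    [4, 2],
    [3, 4],
    [6, 2],
]

print(max_nodes(2))                             # 7
print(check_leaf_bound(x_leaves, 2))            # True
print(sorted(leaf_counts(x_leaves)[0].items())) # [(3, 2), (4, 1), (6, 1)]
```

Gaps in the numbering are harmless for this kind of post-processing because the counts are keyed by leaf id rather than by position.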

`Any xgboost.dask.DaskScikitLearnBase.predict (self, _DataT X, bool output_margin = False, bool validate_features = True, Optional[_DaskCollection] base_margin = None, Optional[Tuple[int, int]] iteration_range = None)`

Predict with `X`. If the model is trained with early stopping, then :py:attr:`best_iteration` is used automatically. The estimator uses `inplace_predict` by default and falls back to using :py:class:`DMatrix` if the devices of the data and the estimator don't match.

.. note:: This function is only thread safe for `gbtree` and `dart`.
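The rule that :py:attr:`best_iteration` is used automatically can be pictured as a small resolution step that runs before prediction. The sketch below is a plain-Python model of that rule, not xgboost's code. It assumes, as in recent xgboost releases where the logic sits in the protected `_get_iteration_range` member, that a missing range or a range ending at 0 falls back to `(0, best_iteration + 1)` when early stopping recorded a best iteration, and to `(0, 0)`, meaning "use all trees", otherwise. `resolve_iteration_range` is a hypothetical name; check the installed version's source before relying on the details.

```python
from typing import Optional, Tuple

IterationRange = Tuple[int, int]


def resolve_iteration_range(
    iteration_range: Optional[IterationRange],
    best_iteration: Optional[int],
) -> IterationRange:
    """Hypothetical model of how predict()/apply() choose which rounds to use.

    The pair (0, 0) stands for "use every boosting round".
    """
    if iteration_range is None or iteration_range[1] == 0:
        if best_iteration is not None:
            # Early stopping recorded a best round: use rounds [0, best + 1).
            return (0, best_iteration + 1)
        return (0, 0)
    # An explicit range from the caller is used as given.
    return iteration_range


print(resolve_iteration_range(None, best_iteration=41))      # (0, 42)
print(resolve_iteration_range(None, best_iteration=None))    # (0, 0)
print(resolve_iteration_range((10, 20), best_iteration=41))  # (10, 20)
```

The `+ 1` is needed because the range is half-open: to include the best round itself, the end must be one past it.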

Parameters
----------
X :
    Data to predict with.
output_margin :
    Whether to output the raw untransformed margin value.
validate_features :
    When this is True, validate that the Booster's and data's feature_names are identical. Otherwise, it is assumed that the feature_names are the same.
base_margin :
    Margin added to prediction.
iteration_range :
    Specifies which layers of trees are used in prediction. For example, if a random forest is trained with 100 rounds, specifying ``iteration_range=(10, 20)`` means that only the forests built during rounds [10, 20) (a half-open interval) are used in this prediction.

    .. versionadded:: 1.4.0
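Because boosting is additive, restricting `iteration_range` amounts to summing only the contributions of the selected rounds. The sketch below assumes a hypothetical list `round_outputs` holding one raw-margin contribution per boosting round for a single sample (for a random forest, each entry would be the combined output of that round's trees). It illustrates the half-open `[begin, end)` selection only and is not how xgboost evaluates trees internally; treating an end of 0 as "through the last round" is an assumption mirroring the default.

```python
from typing import Sequence, Tuple


def margin_for_range(
    round_outputs: Sequence[float],
    iteration_range: Tuple[int, int],
    base: float = 0.0,
) -> float:
    """Sum hypothetical per-round contributions for rounds in [begin, end)."""
    begin, end = iteration_range
    if end == 0:
        # Assumed convention: an end of 0 means "through the last round".
        end = len(round_outputs)
    if not 0 <= begin <= end <= len(round_outputs):
        raise ValueError(f"invalid iteration_range {iteration_range!r}")
    # range(begin, end) is half-open: round `end` itself is excluded.
    return base + sum(round_outputs[i] for i in range(begin, end))


# Hypothetical 100-round model; round i contributes i so the sums are easy to check.
outputs = [float(i) for i in range(100)]
print(margin_for_range(outputs, (10, 20)))  # 145.0  (rounds 10..19 only)
print(margin_for_range(outputs, (0, 0)))    # 4950.0 (all 100 rounds)
```

With `(10, 20)` exactly ten rounds contribute, which matches the "half open set" wording above.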

Returns
-------
prediction

Reimplemented from xgboost.sklearn.XGBModel.
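To see what `output_margin` and `base_margin` change, consider a binary classifier trained with the `binary:logistic` objective (an assumption; other objectives apply other transforms). In this sketch the raw margin is the supplied `base_margin` plus the summed tree outputs; `output_margin=True` returns that margin unchanged, while the default maps it through the logistic sigmoid. `predict_sketch` and the leaf values are hypothetical and only illustrate the arithmetic for a single sample.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic link used by binary:logistic (naive form, fine for moderate z)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_sketch(
    tree_outputs: Sequence[float],
    base_margin: float = 0.0,
    output_margin: bool = False,
) -> float:
    """Hypothetical single-sample prediction for a binary:logistic model."""
    # Raw (untransformed) margin: starting margin plus every tree's leaf value.
    margin = base_margin + sum(tree_outputs)
    if output_margin:
        return margin
    # Default: turn the margin into a probability.
    return sigmoid(margin)


leaf_values = [0.5, -0.25, 0.25]  # made-up leaf values from three trees
print(predict_sketch(leaf_values, output_margin=True))                    # 0.5
print(predict_sketch(leaf_values, base_margin=-0.5, output_margin=True))  # 0.0
print(predict_sketch(leaf_values, base_margin=-0.5))                      # 0.5
```

Shifting `base_margin` moves the margin before the sigmoid is applied, so it changes probabilities nonlinearly even though it is a plain additive offset in margin space.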

Public Member Functions inherited from xgboost.sklearn.XGBModel

Data Fields inherited from xgboost.sklearn.XGBModel

Protected Member Functions inherited from xgboost.sklearn.XGBModel

Protected Attributes inherited from xgboost.sklearn.XGBModel