| Return type | Member function |
|---|---|
| None | `__init__ (self, DataType data, Optional[ArrayLike] label=None, *, Optional[ArrayLike] weight=None, Optional[ArrayLike] base_margin=None, Optional[float] missing=None, bool silent=False, Optional[FeatureNames] feature_names=None, Optional[FeatureTypes] feature_types=None, Optional[int] nthread=None, Optional[int] max_bin=None, Optional[DMatrix] ref=None, Optional[ArrayLike] group=None, Optional[ArrayLike] qid=None, Optional[ArrayLike] label_lower_bound=None, Optional[ArrayLike] label_upper_bound=None, Optional[ArrayLike] feature_weights=None, bool enable_categorical=False, DataSplitMode data_split_mode=DataSplitMode.ROW)` |
| None | `__del__ (self)` |
| None | `set_info (self, *, Optional[ArrayLike] label=None, Optional[ArrayLike] weight=None, Optional[ArrayLike] base_margin=None, Optional[ArrayLike] group=None, Optional[ArrayLike] qid=None, Optional[ArrayLike] label_lower_bound=None, Optional[ArrayLike] label_upper_bound=None, Optional[FeatureNames] feature_names=None, Optional[FeatureTypes] feature_types=None, Optional[ArrayLike] feature_weights=None)` |
| np.ndarray | `get_float_info (self, str field)` |
| np.ndarray | `get_uint_info (self, str field)` |
| None | `set_float_info (self, str field, ArrayLike data)` |
| None | `set_float_info_npy2d (self, str field, ArrayLike data)` |
| None | `set_uint_info (self, str field, ArrayLike data)` |
| None | `save_binary (self, Union[str, os.PathLike] fname, bool silent=True)` |
| None | `set_label (self, ArrayLike label)` |
| None | `set_weight (self, ArrayLike weight)` |
| None | `set_base_margin (self, ArrayLike margin)` |
| None | `set_group (self, ArrayLike group)` |
| np.ndarray | `get_label (self)` |
| np.ndarray | `get_weight (self)` |
| np.ndarray | `get_base_margin (self)` |
| np.ndarray | `get_group (self)` |
| scipy.sparse.csr_matrix | `get_data (self)` |
| Tuple[np.ndarray, np.ndarray] | `get_quantile_cut (self)` |
| int | `num_row (self)` |
| int | `num_col (self)` |
| int | `num_nonmissing (self)` |
| "DMatrix" | `slice (self, Union[List[int], np.ndarray] rindex, bool allow_groups=False)` |
| Optional[FeatureNames] | `feature_names (self)` (property getter) |
| None | `feature_names (self, Optional[FeatureNames] feature_names)` (property setter) |
| Optional[FeatureTypes] | `feature_types (self)` (property getter) |
| None | `feature_types (self, Optional[FeatureTypes] feature_types)` (property setter) |

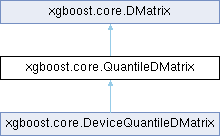

A DMatrix variant that generates quantized data directly from the input for the
``hist`` tree method. This DMatrix is primarily designed to save memory in
training by avoiding intermediate storage. Set ``max_bin`` to control the number
of bins during quantisation; it should be consistent with the training parameter
``max_bin``. When ``QuantileDMatrix`` is used for a validation/test dataset,
``ref`` should be another ``QuantileDMatrix`` (or a ``DMatrix``, though this is
not recommended as it defeats the purpose of saving memory) constructed from the
training dataset. See :py:obj:`xgboost.DMatrix` for documentation on meta info.

.. note::

   Do not use ``QuantileDMatrix`` as a validation/test dataset without supplying
   a reference (the training dataset's ``QuantileDMatrix``) through ``ref``, as
   some information may be lost in quantisation.
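To see why the reference matters, here is a NumPy-only illustration of binning with shared versus recomputed cut points. It is not XGBoost's algorithm: XGBoost builds its cuts with a weighted quantile sketch, while this sketch uses ``np.quantile`` as a simple stand-in, and the helpers ``make_cuts`` and ``quantise`` are hypothetical names introduced only for this example.

```python
import numpy as np


def make_cuts(x: np.ndarray, max_bin: int) -> np.ndarray:
    """Hypothetical helper: max_bin - 1 interior cut points at evenly spaced quantiles."""
    qs = np.linspace(0.0, 1.0, max_bin + 1)[1:-1]
    return np.quantile(x, qs)


def quantise(x: np.ndarray, cuts: np.ndarray) -> np.ndarray:
    """Hypothetical helper: map raw values to bin indices 0 .. len(cuts)."""
    return np.searchsorted(cuts, x, side="right")


rng = np.random.default_rng(0)
x_train = rng.normal(loc=0.0, size=1000)
x_valid = rng.normal(loc=1.0, size=1000)  # validation data with a shifted mean

train_cuts = make_cuts(x_train, max_bin=8)
valid_cuts = make_cuts(x_valid, max_bin=8)

# Consistent: validation values are binned with the training cuts (the role of ``ref``).
bins_with_ref = quantise(x_valid, train_cuts)
# Inconsistent: cuts recomputed from the validation data itself.
bins_without_ref = quantise(x_valid, valid_cuts)

# The same raw value lands in different bins under the two schemes, so a split
# learned on training bin indices would mean something else on validation data.
probe = np.array([0.0])
print(quantise(probe, train_cuts), quantise(probe, valid_cuts))
```

With recomputed cuts, every dataset looks roughly uniform across bins, which hides the shift in the validation distribution; reusing the training cuts preserves it.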

.. versionadded:: 1.7.0

Parameters
----------
max_bin :
    The number of histogram bins; should be consistent with the training
    parameter ``max_bin``.
ref :
    The training dataset that provides quantile information, needed when
    creating a validation/test dataset with ``QuantileDMatrix``. Supplying the
    training DMatrix as a reference means that the same quantisation applied to
    the training data is applied to the validation/test data.

`None xgboost.core.QuantileDMatrix.__init__ (self, DataType data, Optional[ArrayLike] label=None, *, Optional[ArrayLike] weight=None, Optional[ArrayLike] base_margin=None, Optional[float] missing=None, bool silent=False, Optional[FeatureNames] feature_names=None, Optional[FeatureTypes] feature_types=None, Optional[int] nthread=None, Optional[int] max_bin=None, Optional[DMatrix] ref=None, Optional[ArrayLike] group=None, Optional[ArrayLike] qid=None, Optional[ArrayLike] label_lower_bound=None, Optional[ArrayLike] label_upper_bound=None, Optional[ArrayLike] feature_weights=None, bool enable_categorical=False, DataSplitMode data_split_mode=DataSplitMode.ROW)`

Parameters
----------
data :
    Data source of DMatrix. See :ref:`py-data` for a list of supported input
    types.
label :
    Label of the training data.
weight :
    Weight for each instance.

    .. note::

        For ranking tasks, weights are per-group. In ranking tasks, one weight
        is assigned to each group (not each data point). This is because we
        only care about the relative ordering of data points within each group,
        so it doesn't make sense to assign weights to individual data points.

base_margin :
    Base margin used for boosting from an existing model.
missing :
    Value in the input data to be treated as a missing value. If None, defaults
    to np.nan.
silent :
    Whether to suppress messages during construction.
feature_names :
    Set names for features.
feature_types :
    Set types for features. When ``enable_categorical`` is set to ``True``, the
    string "c" represents a categorical data type while "q" represents a
    numerical feature type. For categorical features, the input is assumed to be
    preprocessed and encoded by the users. The encoding can be done via
    :py:class:`sklearn.preprocessing.OrdinalEncoder` or the pandas
    ``Series.cat.codes`` attribute. This is useful when users want to specify
    categorical features without having to construct a dataframe as input.

nthread :
    Number of threads to use for loading data when parallelization is
    applicable. If -1, uses the maximum number of threads available on the
    system.
group :
    Group size for each ranking group.
qid :
    Query ID for data samples, used for ranking.
label_lower_bound :
    Lower bound for survival training.
label_upper_bound :
    Upper bound for survival training.
feature_weights :
    Set feature weights for column sampling.
enable_categorical :

    .. versionadded:: 1.3.0

    .. note:: This parameter is experimental

    Experimental support for categorical features. Do not set to True unless
    you are interested in development. Also, the JSON/UBJSON serialization
    format is required.
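For ranking, ``group`` and ``qid`` describe the same partition of rows in two ways: ``qid`` gives a query ID per row, while ``group`` gives the size of each consecutive group. The NumPy-only sketch below converts a ``qid`` array into group sizes and builds one weight per group, as the note under ``weight`` describes. The data is made up, and the sketch assumes rows are sorted by query ID so that each query forms a contiguous block.

```python
import numpy as np

# Hypothetical query IDs for 7 rows, sorted so each query is contiguous.
qid = np.array([0, 0, 0, 1, 1, 2, 2])

# Group sizes in order of query ID: the ``group`` form of the same partition.
_, group = np.unique(qid, return_counts=True)

# One weight per group (not per row), e.g. to up-weight query 2.
group_weight = np.array([1.0, 1.0, 2.0])

# These arrays would be passed as ``qid=qid`` (or ``group=group``) and
# ``weight=group_weight`` to the constructor documented above.
print(group)  # [3 2 2]
```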

Reimplemented from xgboost.core.DMatrix.

Reimplemented in xgboost.core.DeviceQuantileDMatrix.

Public Member Functions inherited from xgboost.core.DMatrix

Data Fields inherited from xgboost.core.DMatrix

Protected Member Functions inherited from xgboost.core.DMatrix