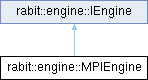

Implementation of the engine using MPI.

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| `void` | `Allgather(void *sendrecvbuf_, size_t total_size, size_t slice_begin, size_t slice_end, size_t size_prev_slice) override` | Allgather function: each node holds a segment of data in the ring of `sendrecvbuf_`. The data provided by the current node k is [slice_begin, slice_end), and the next node's segment must start at slice_end. After the call, `sendrecvbuf_` contains all segments. A ring-based algorithm is used. |
| `void` | `Allreduce(void *sendrecvbuf_, size_t type_nbytes, size_t count, ReduceFunction reducer, PreprocFunction prepare_fun, void *prepare_arg) override` | Performs an in-place Allreduce on `sendrecvbuf_`. This function is NOT thread-safe. |
| `int` | `GetRingPrevRank(void) const override` | Gets the rank of the previous node in the ring topology. |
| `void` | `Broadcast(void *sendrecvbuf_, size_t size, int root) override` | Broadcasts data from root to every other node. |
| `virtual void` | `InitAfterException(void)` | |
| `virtual int` | `LoadCheckPoint(Serializable *global_model, Serializable *local_model=NULL)` | |
| `virtual void` | `CheckPoint(const Serializable *global_model, const Serializable *local_model=NULL)` | |
| `virtual void` | `LazyCheckPoint(const Serializable *global_model)` | |
| `virtual int` | `VersionNumber(void) const` | |
| `virtual int` | `GetRank(void) const` | Gets the rank of the current node. |
| `virtual int` | `GetWorldSize(void) const` | Gets the total number of nodes. |
| `virtual bool` | `IsDistributed(void) const` | Whether the engine is distributed. |
| `virtual std::string` | `GetHost(void) const` | Gets the host name of the current node. |
| `virtual void` | `TrackerPrint(const std::string &msg)` | Prints msg in the tracker. This can be used to communicate progress information to the user who monitors the tracker. |

Public Member Functions inherited from rabit::engine::IEngine

| Return type | Member | Description |
|---|---|---|
| | `~IEngine()=default` | Virtual destructor. |
| `virtual int` | `LoadCheckPoint()=0` | |
| `virtual void` | `CheckPoint()=0` | Increase internal version number. Deprecated. |

Additional Inherited Members

Public Types inherited from rabit::engine::IEngine

| Type | Name | Description |
|---|---|---|
| `typedef void` | `PreprocFunction(void *arg)` | Preprocessing function called before Allreduce to prepare the data used by Allreduce. |
| `typedef void` | `ReduceFunction(const void *src, void *dst, int count, const MPI::Datatype &dtype)` | Reduce function. It has the same form as an MPI reduce function, for compatibility with the MPI interface. In all functions, memory is guaranteed to be aligned to 64 bits, so it is safe to cast `src` and `dst` to `double*`, `int*`, etc. |
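To illustrate the ReduceFunction contract, here is a minimal sketch of an element-wise max reducer. `FakeDatatype`, `SimReduceFunction`, and `MaxDouble` are hypothetical names introduced for this example; `FakeDatatype` stands in for `MPI::Datatype` only so the sketch compiles without the MPI C++ bindings.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for MPI::Datatype, so the sketch builds without MPI.
// A real reducer receives the engine's datatype object here.
struct FakeDatatype {};

// Mirrors the shape of IEngine::ReduceFunction, using the stand-in type.
typedef void SimReduceFunction(const void *src, void *dst, int count,
                               const FakeDatatype &dtype);

// Element-wise max over doubles: dst[i] = max(dst[i], src[i]).
// The buffers arrive as void*; the documented 64-bit alignment guarantee
// is what makes casting them to double* safe.
void MaxDouble(const void *src_, void *dst_, int count, const FakeDatatype &) {
  assert(count >= 0);
  const double *src = static_cast<const double *>(src_);
  double *dst = static_cast<double *>(dst_);
  for (int i = 0; i < count; ++i) dst[i] = std::max(dst[i], src[i]);
}
```

The reducer folds `src` into `dst` in place, which is why only `dst` is non-const.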

Detailed Description

Implementation of the engine using MPI.

Member Function Documentation

◆ Allgather()

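`void Allgather(void *sendrecvbuf_, size_t total_size, size_t slice_begin, size_t slice_end, size_t size_prev_slice)` [inline, override, virtual]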

Allgather function: each node holds a segment of data in the ring of `sendrecvbuf_`. The data provided by the current node k is [slice_begin, slice_end), and the next node's segment must start at slice_end. After the call to Allgather, `sendrecvbuf_` contains all the contents, including all segments. A ring-based algorithm is used.

- Parameters
  - `sendrecvbuf_`: buffer for both sending and receiving data; conceptually it is a ring
  - `total_size`: total size of the data to be gathered
  - `slice_begin`: beginning of the current slice
  - `slice_end`: end of the current slice
  - `size_prev_slice`: size of the previous slice, i.e. the slice of node (rank - 1) % world_size

Implements rabit::engine::IEngine.
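The ring layout described above can be modeled in a single process to see why every node ends up with all segments after world_size - 1 steps. This is a sketch, not the engine's implementation: `AllgatherArgs`, `ArgsFor`, and `SimulateRingAllgather` are hypothetical, the even rank-ordered split of `total_size` is an assumption, and it models only the data movement, not how bytes are actually streamed between processes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: the arguments node `rank` would pass to Allgather if
// `total_size` bytes were split as evenly as possible in rank order, so each
// node's slice starts where the previous node's slice ends.
struct AllgatherArgs {
  std::size_t slice_begin, slice_end, size_prev_slice;
};

AllgatherArgs ArgsFor(int rank, int world_size, std::size_t total_size) {
  auto begin = [&](int r) { return total_size * r / world_size; };
  const int prev = (rank + world_size - 1) % world_size;  // (rank - 1) mod n
  return {begin(rank), begin(rank + 1), begin(prev + 1) - begin(prev)};
}

// Single-process model of a ring Allgather: bufs[k] stands in for node k's
// sendrecvbuf_. In step s (1..n-1), node k copies from its ring predecessor
// the slice that originated at node (k - s) mod n, which the predecessor
// already holds after step s - 1.
void SimulateRingAllgather(std::vector<std::vector<char>> &bufs,
                           std::size_t total_size) {
  const int n = static_cast<int>(bufs.size());
  for (int step = 1; step < n; ++step) {
    for (int k = 0; k < n; ++k) {
      assert(bufs[k].size() == total_size);
      const int prev = (k + n - 1) % n;
      const int owner = ((k - step) % n + n) % n;
      const AllgatherArgs a = ArgsFor(owner, n, total_size);
      for (std::size_t i = a.slice_begin; i < a.slice_end; ++i)
        bufs[k][i] = bufs[prev][i];
    }
  }
}
```

With 4 nodes and 10 bytes, the slices have sizes 2, 3, 2, 3, so filling each node's own slice with its letter and running the simulation leaves every buffer as `AABBBCCDDD`.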

◆ Allreduce()

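`void Allreduce(void *sendrecvbuf_, size_t type_nbytes, size_t count, ReduceFunction reducer, PreprocFunction prepare_fun, void *prepare_arg)` [inline, override, virtual]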

Performs an in-place Allreduce on `sendrecvbuf_`. This function is NOT thread-safe.

- Parameters
  - `sendrecvbuf_`: buffer for both sending and receiving data
  - `type_nbytes`: number of bytes in one element of the type
  - `count`: number of elements to be reduced
  - `reducer`: reduce function
  - `prepare_fun`: lazy preprocessing function. If it is not NULL, `prepare_fun(prepare_arg)` is called before performing Allreduce in order to initialize the data in `sendrecvbuf_`. If the result of Allreduce can be recovered directly, `prepare_fun` is NOT called.
  - `prepare_arg`: argument passed to the lazy preprocessing function

Implements rabit::engine::IEngine.
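The Allreduce contract (optional lazy preparation, then an in-place reduction that leaves the same result on every node) can be modeled in a single process. This is a sketch under stated assumptions, not the engine's implementation: `SimulateAllreduce`, `PrepArg`, `FillWith`, and `SumDouble` are hypothetical; buffers hold `double`, so `type_nbytes` is implied; and the `MPI::Datatype` argument of the reducer is dropped.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified stand-ins for IEngine::ReduceFunction / PreprocFunction
// (assumption: the MPI::Datatype argument is dropped).
typedef void SimReduceFunction(const void *src, void *dst, int count);
typedef void SimPreprocFunction(void *arg);

// Hypothetical reducer: dst[i] += src[i] over doubles.
void SumDouble(const void *src_, void *dst_, int count) {
  const double *src = static_cast<const double *>(src_);
  double *dst = static_cast<double *>(dst_);
  for (int i = 0; i < count; ++i) dst[i] += src[i];
}

// Hypothetical lazy-preparation argument: fills one node's buffer with `value`.
struct PrepArg {
  std::vector<double> *buf;
  double value;
};
void FillWith(void *arg) {
  PrepArg *p = static_cast<PrepArg *>(arg);
  for (double &x : *p->buf) x = p->value;
}

// Models Allreduce over bufs.size() simulated nodes. If prepare_fun is not
// null it initializes each node's buffer first (an engine that can recover
// the result directly would skip this; that case is not modeled). Afterwards
// every buffer holds the reduction of all buffers.
void SimulateAllreduce(std::vector<std::vector<double>> &bufs, std::size_t count,
                       SimReduceFunction *reducer, SimPreprocFunction *prepare_fun,
                       const std::vector<void *> &prepare_args) {
  assert(!bufs.empty() && prepare_args.size() == bufs.size());
  for (std::size_t k = 0; k < bufs.size(); ++k) {
    assert(bufs[k].size() == count);
    if (prepare_fun != nullptr) prepare_fun(prepare_args[k]);
  }
  std::vector<double> acc = bufs[0];
  for (std::size_t k = 1; k < bufs.size(); ++k)
    reducer(bufs[k].data(), acc.data(), static_cast<int>(count));
  for (std::vector<double> &b : bufs) b = acc;  // same result on every node
}
```

Deferring initialization to `prepare_fun` lets a caller avoid computing the local contribution at all when the engine does not need it.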

◆ Broadcast()

`void Broadcast(void *sendrecvbuf_, size_t size, int root)` [inline, override, virtual]

Broadcasts data from root to every other node.

- Parameters
  - `sendrecvbuf_`: buffer for both sending and receiving data
  - `size`: size of the data to be broadcast
  - `root`: ID of the root worker that broadcasts the data

Implements rabit::engine::IEngine.
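The effect of Broadcast can be sketched as a single-process model in which each vector stands in for one node's `sendrecvbuf_`. `SimulateBroadcast` is a hypothetical name, and the sketch shows only the observable result, not how the engine transfers data.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Single-process model of Broadcast(sendrecvbuf_, size, root): bufs[k] stands
// in for node k's buffer. Afterwards the first `size` bytes of every buffer
// equal the root's; the root's own buffer is left untouched.
void SimulateBroadcast(std::vector<std::vector<char>> &bufs, std::size_t size,
                       int root) {
  assert(root >= 0 && static_cast<std::size_t>(root) < bufs.size());
  const std::vector<char> &src = bufs[root];
  assert(src.size() >= size);
  for (std::size_t k = 0; k < bufs.size(); ++k) {
    if (static_cast<int>(k) == root) continue;
    assert(bufs[k].size() >= size);  // receivers must already have room
    std::copy(src.begin(), src.begin() + static_cast<std::ptrdiff_t>(size),
              bufs[k].begin());
  }
}
```

Because every receiver's buffer must already hold at least `size` bytes, all nodes need to agree on `size` before the call.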

◆ GetHost()

`virtual std::string GetHost(void) const` [inline, virtual]

Gets the host name of the current node.

Implements rabit::engine::IEngine.

◆ GetRank()

`virtual int GetRank(void) const` [inline, virtual]

Gets the rank of the current node.

Implements rabit::engine::IEngine.

◆ GetRingPrevRank()

`int GetRingPrevRank(void) const` [inline, override, virtual]

Gets the rank of the previous node in the ring topology.

Implements rabit::engine::IEngine.
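Assuming the ring follows rank order, as the `size_prev_slice` description for Allgather ("node (rank - 1) % world_size") suggests, the previous rank reduces to modular arithmetic. `RingPrevRank` and `RingNextRank` are hypothetical free functions for illustration; the member function presumably uses the engine's own rank and world size instead of taking them as arguments.

```cpp
#include <cassert>

// Assumption: ring order follows rank order, 0 -> 1 -> ... -> n-1 -> 0.
int RingPrevRank(int rank, int world_size) {
  assert(world_size > 0 && rank >= 0 && rank < world_size);
  // Adding world_size first avoids C++'s negative result for (-1) % n.
  return (rank + world_size - 1) % world_size;
}

int RingNextRank(int rank, int world_size) {
  assert(world_size > 0 && rank >= 0 && rank < world_size);
  return (rank + 1) % world_size;
}
```

Writing `(rank - 1) % world_size` literally in C++ would give -1 for rank 0, which is why the sketch adds `world_size` before taking the remainder.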

◆ GetWorldSize()

`virtual int GetWorldSize(void) const` [inline, virtual]

Gets the total number of nodes.

Implements rabit::engine::IEngine.

◆ IsDistributed()

`virtual bool IsDistributed(void) const` [inline, virtual]

Returns whether the engine is distributed.

Implements rabit::engine::IEngine.

◆ TrackerPrint()

`virtual void TrackerPrint(const std::string &msg)` [inline, virtual]

Prints msg in the tracker. This function can be used to communicate progress information to the user who monitors the tracker.

- Parameters
  - `msg`: message to be printed in the tracker

Implements rabit::engine::IEngine.

◆ VersionNumber()

`virtual int VersionNumber(void) const` [inline, virtual]

- Returns
  - the version number of the currently stored model, i.e. the number of calls made to CheckPoint so far
- See also
  - LoadCheckPoint, CheckPoint

Implements rabit::engine::IEngine.

The documentation for this class was generated from the following file:

- External/xgboost/rabit/src/engine_mpi.cc