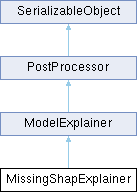

Shapley Explainer - based on learning from training data to handle missing_values as "correct" input.

#include <ExplainWrapper.h>

Public Member Functions | |

| void | _learn (const MedFeatures &train_mat) |

| overload function for ModelExplainer - easier API | |

| void | explain (const MedFeatures &matrix, vector< map< string, float > > &sample_explain_reasons) const |

| Virtual - return explain results in sample_feature_contrib. | |

Public Member Functions inherited from ModelExplainer | |

| virtual int | init (map< string, string > &mapper) |

| Global init for general args in all explainers. initialize directly all args in GlobalExplainerParams. | |

| virtual int | update (map< string, string > &mapper) |

| Virtual to update object from parsed fields. | |

| virtual void | Learn (const MedFeatures &train_mat) |

| Learns from predictor and train_matrix (PostProcessor API) | |

| void | Apply (MedFeatures &matrix) |

| alias for explain | |

| void | get_input_fields (vector< Effected_Field > &fields) const |

| List of fields that are used by this post_processor. | |

| void | get_output_fields (vector< Effected_Field > &fields) const |

| List of fields that are being effected by this post_processor. | |

| void | init_post_processor (MedModel &model) |

| Init ModelExplainer from MedModel - copies predictor pointer, might save normalizers pointers. | |

| virtual void | explain (MedFeatures &matrix) const |

| Stores explain results in matrix. | |

| void | dprint (const string &pref) const |

Public Member Functions inherited from PostProcessor | |

| void * | new_polymorphic (string dname) |

| For polymorphic classes that need to serialize/deserialize a pointer to a derived class: implement this function to return a new instance of the derived class given its type name (as in my_type). | |

| virtual float | get_use_p () |

| virtual int | get_use_split () |

Public Member Functions inherited from SerializableObject | |

| virtual int | version () const |

| Relevant for serializations. | |

| virtual string | my_class_name () const |

| For better handling of serializations it is highly recommended that each SerializableObject inheriting class will implement the next method. | |

| virtual void | serialized_fields_name (vector< string > &field_names) const |

| The names of the serialized fields. | |

| virtual void | pre_serialization () |

| virtual void | post_deserialization () |

| virtual size_t | get_size () |

| Gets bytes sizes for serializations. | |

| virtual size_t | serialize (unsigned char *blob) |

| Serializes the object to blob memory. Returns the number of bytes written to memory. | |

| virtual size_t | deserialize (unsigned char *blob) |

| Deserializes the blob into the object. Returns the number of bytes read. | |

| size_t | serialize_vec (vector< unsigned char > &blob) |

| size_t | deserialize_vec (vector< unsigned char > &blob) |

| virtual size_t | serialize (vector< unsigned char > &blob) |

| virtual size_t | deserialize (vector< unsigned char > &blob) |

| virtual int | read_from_file (const string &fname) |

| read and deserialize model | |

| virtual int | write_to_file (const string &fname) |

| serialize model and write to file | |

| virtual int | read_from_file_unsafe (const string &fname) |

| read and deserialize model without checking version number - unsafe read | |

| int | init_from_string (string init_string) |

| Init from string. | |

| int | init_params_from_file (string init_file) |

| int | init_param_from_file (string file_str, string &param) |

| int | update_from_string (const string &init_string) |

| virtual string | object_json () const |

Data Fields | |

| int | add_new_data |

| How many new data points to add for training, according to sample masks | |

| bool | no_relearn |

| If true, uses the original model without relearning. Assumes the original model handles missing values well enough (for example an LM model). | |

| int | max_test |

| max number of samples in SHAP | |

| float | missing_value |

| missing value | |

| bool | sample_masks_with_repeats |

| Whether or not to sample masks with repeats. | |

| float | select_from_all |

| If max_test is beyond this percentage of all options, then sample from all options (to speed up runtime) | |

| bool | uniform_rand |

| If true, will sample masks uniformly | |

| bool | use_shuffle |

| When not sampling uniformly: if true, will use shuffle (to speed up runtime) | |

| string | predictor_args |

| arguments to change in predictor - for example to change it into regression | |

| string | predictor_type |

| bool | verbose_learn |

| If true will print more in learn. | |

| string | verbose_apply |

| If has value - output file. | |

| float | max_weight |

| The maximal weight. If < 0, no limit | |

| int | subsample_train |

| If not zero, subsamples the original train samples to this number | |

| int | limit_mask_size |

| If set, limits the mask size in training - useful for minimal_set | |

| bool | use_minimal_set |

| If true will use different method to find minimal set. | |

| float | sort_params_a |

| weight for minimal distance from original score importance | |

| float | sort_params_b |

| weight for variance in prediction using imputation. the rest is change from prev | |

| float | sort_params_k1 |

| weight for minimal distance from original score importance | |

| float | sort_params_k2 |

| weight for variance in prediction using imputation. the rest is change from prev | |

| int | max_set_size |

| the size to look for to explain | |

| float | override_score_bias |

| When given, used as the score bias if train is very different from test | |

| float | split_to_test |

| If > 0 and < 1, reports RMSE on this ratio of the data | |

Data Fields inherited from ModelExplainer | |

| MedPredictor * | original_predictor = NULL |

| predictor we're trying to explain | |

| ExplainFilters | filters |

| general filters of results | |

| ExplainProcessings | processing |

| processing of results, like groupings, COV | |

| GlobalExplainerParams | global_explain_params |

Data Fields inherited from PostProcessor | |

| PostProcessorTypes | processor_type = PostProcessorTypes::FTR_POSTPROCESS_LAST |

| int | use_split = -1 |

| float | use_p = 0.0 |

Additional Inherited Members | |

Static Public Member Functions inherited from ModelExplainer | |

| static void | print_explain (MedSample &smp, int sort_mode=0) |

Static Public Member Functions inherited from PostProcessor | |

| static PostProcessor * | make_processor (const string &processor_name, const string &params="") |

| static PostProcessor * | make_processor (PostProcessorTypes type, const string &params="") |

| static PostProcessor * | create_processor (string &params) |

Detailed Description

Shapley Explainer - based on learning from training data to handle missing_values as "correct" input.

Predicting on a sample with a missing_values mask then gives E[F(X)] when X has missing values, which allows much faster computation. All we need to do is reweight or manipulate the missing values (erase or add them) in the training data so that each sample is built as follows: first sample uniformly how many values should be missing, then randomly remove that many values and set them to the missing value. This makes every count of missing values equally weighted in training, the same as the weights in the SHAP values calculation.
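The sampling scheme described above can be sketched in a few lines of C++. This is a minimal illustration and not the code in ExplainWrapper.cpp: the helper names `sample_missing_mask` and `apply_missing_mask` are hypothetical, and the sketch assumes the number of missing features is drawn from the inclusive range 0..n. The motivation is that in the Shapley formula the weights of all feature subsets of a given size sum to 1/n, so every subset size carries the same total weight.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical helper (not part of ExplainWrapper.h): draws one mask.
// mask[i] == true means feature i will be replaced by the missing value.
std::vector<bool> sample_missing_mask(std::size_t n_features, std::mt19937 &rng) {
    // Step 1: draw the number of missing features uniformly from 0..n_features
    // (the inclusive range is an assumption of this sketch).
    std::uniform_int_distribution<std::size_t> count_dist(0, n_features);
    const std::size_t n_missing = count_dist(rng);

    // Step 2: choose which features to hide by shuffling the indices
    // and taking the first n_missing of them.
    std::vector<std::size_t> idx(n_features);
    std::iota(idx.begin(), idx.end(), std::size_t{0});
    std::shuffle(idx.begin(), idx.end(), rng);

    std::vector<bool> mask(n_features, false);
    for (std::size_t i = 0; i < n_missing; ++i) mask[idx[i]] = true;
    return mask;
}

// Hypothetical helper: returns a copy of row with masked entries
// overwritten by missing_value.
std::vector<float> apply_missing_mask(const std::vector<float> &row,
                                      const std::vector<bool> &mask,
                                      float missing_value) {
    std::vector<float> out(row);
    for (std::size_t i = 0; i < out.size() && i < mask.size(); ++i)
        if (mask[i]) out[i] = missing_value;
    return out;
}
```

Calling `sample_missing_mask` once per generated training row and passing the result to `apply_missing_mask` yields rows in which each count of missing values is equally likely, which is the property the description relies on.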

Member Function Documentation

◆ _learn()

| void _learn (const MedFeatures &train_mat) | virtual |

overload function for ModelExplainer - easier API

Implements ModelExplainer.

◆ explain()

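| void explain (const MedFeatures &matrix, vector< map< string, float > > &sample_explain_reasons) const | virtual |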

Virtual - return explain results in sample_feature_contrib.

Implements ModelExplainer.

The documentation for this class was generated from the following files:

- Internal/MedProcessTools/MedProcessTools/ExplainWrapper.h

- Internal/MedProcessTools/MedProcessTools/ExplainWrapper.cpp